This is an unusually long post, but I wanted to write something comprehensive enough to cover the specific strategies I use without skipping over the basics (for those who are unfamiliar with ChatGPT).

Note: Consider this a working draft, as I intend to update and refine it over time. Feel free to reach out if you have any comments or suggestions.

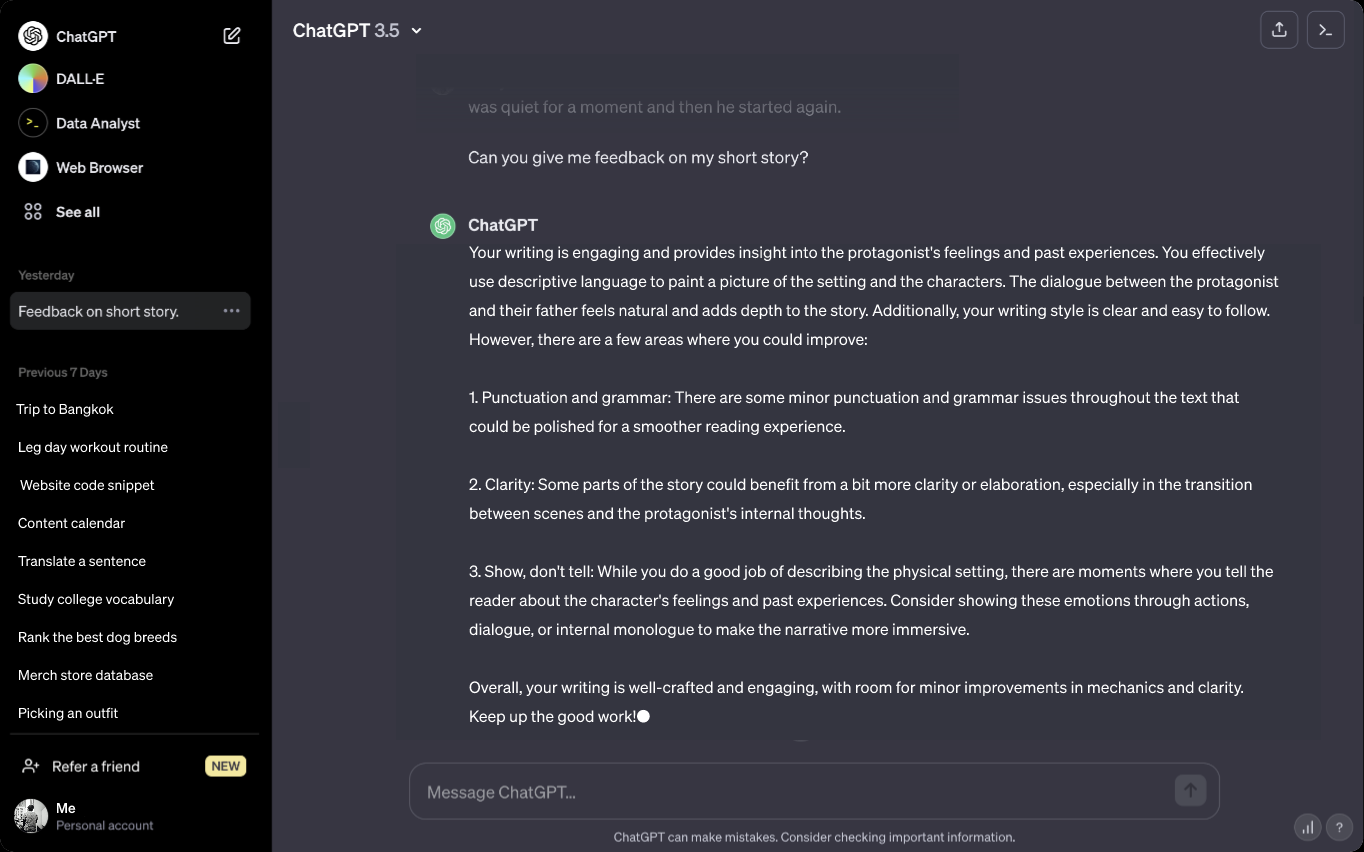

How I used AI: ChatGPT wasn’t used to write this post; please see the remaining grammatical and spelling errors as evidence of this fact. ChatGPT was used to produce the examples presented in this post, and to make those examples easier to reproduce I’ve used ChatGPT 3.5.

To make it easier to skip ahead, I’ve listed the sections of the post below:

- Introducing ChatGPT: Covering what the hell ChatGPT is. Skip this if you were paying attention to the news in 2023.

- My Introduction to ChatGPT: Summarizing my initial impression of ChatGPT when I first took GPT 3.5 for a test drive.

- Don’t Ask Stupid Questions: Where you’ll be regaled with a well-worn cliché illustrating why asking stupid questions of machines is a poor strategy for getting intelligent answers.

- Prompt Engineering Basics: In which you’ll be whisked through the theory of prompt engineering by somebody who knows very little about it.

- Conversation Starters: Which covers some of the prompting strategies that I’ve found to be helpful.

- Move Slow and Make Things: Where I explain how to manage the most intelligent and least practical intern you’ll ever meet.

- What I ChatGPT: Summarizing some of the common use cases I’ve found for ChatGPT as a public policy professional who does a little bit of everything.

- Concluding remarks: Because all blogs need a conclusion.

Introducing ChatGPT

If you were lucky enough to have skipped 2023, you might have missed the hype surrounding the launch of OpenAI’s ChatGPT: a chatbot advanced enough to hold in-depth, fluid conversations with users on a myriad of mundane and technical topics.

Want it to write a poem about pirates?

Ask ChatGPT to write one.

Want to summarize an article?

Give it to ChatGPT and ask for a summary.

Want to write some code?

Tell ChatGPT the problem you want to solve and ask it for code.

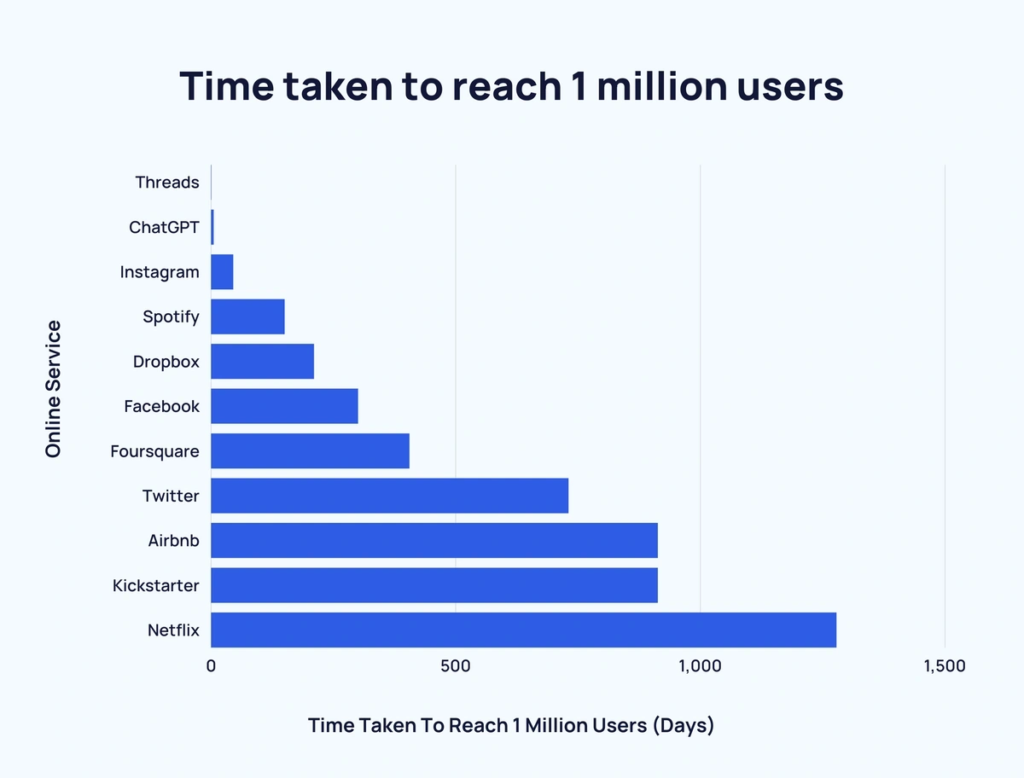

All in all, it was a pretty big deal. The chatbot attracted close to 100 million unique users within two months of launch, making it one of the fastest-growing consumer-focused applications ever created.

But chatbots aren’t new, so what exactly is it that spurred an explosion of interest in ChatGPT?

Well, unlike the chatbots you might have interacted with when trying to access customer support, ChatGPT is at its core a ‘Large Language Model’ (LLM) designed to generate human-like text based on input from the user:

The first thing to explain is that what ChatGPT is always fundamentally trying to do is to produce a “reasonable continuation” of whatever text it’s got so far, where by “reasonable” we mean “what one might expect someone to write after seeing what people have written on billions of webpages, etc.”

Stephen Wolfram

And because it was trained on a large volume of text from a wide variety of sources (coding manuals, Wikipedia pages, online bulletin boards, textbooks and the internet at large), ChatGPT has been found to be useful in a large range of scenarios. As a result, knowledge workers increasingly use it for day-to-day tasks such as coding, translation, brainstorming and writing.

My Introduction to ChatGPT

As with every trend, I was comparatively late to the ChatGPT party, signing up for a free account three months after interest in the chatbot first exploded in early 2023.

My first impression of ChatGPT was also pretty typical for first-time users: “sure, it’s kind of quirky and cool, but writing poems about Fiji’s budget is a problem I don’t often need a solution for.”

Okay, but what if I made some more meaningful requests, like asking it questions I come across in my day-to-day work, such as:

What are the key ingredients of an effective and sustainable public policy intervention?

Can you summarize the key areas budget analysts should consider when presenting policy advice to elected officials?

Can you write me R code that converts a list to a dataframe?

Well, like most LLMs, it certainly produced answers, just not always useful ones. ChatGPT mostly appeared to be good at spitting out answers that would be at home in the published reports of a major management consulting firm: bland text, boilerplate advice and excessive buzzwords, the ‘lorem ipsum’ of public policy analysis. What’s worse, ChatGPT tends to answer the user’s question even when it has no idea. This meant some of its answers weren’t only bland, but factually incorrect, with ChatGPT ‘hallucinating’ facts, figures and references in moments of uncertainty.

And while I certainly could edit the text it produced so it didn’t sound like a publication from Deloitte, or fact-check its answers by searching the literature and double-checking its math, this would often take more time than doing the legwork myself. So, after a couple of weeks of experimenting with ChatGPT, the novelty wore off. It seemed like an interesting chatbot for creative types, but not for serious people like me.

Lesson 1: Don’t Ask Stupid Questions

Obviously, if I had never used ChatGPT again, this blog post wouldn’t be warranted. After an extended hiatus, a friend convinced me to give it another try. Like me, he was serious people (being a recovering data scientist). And, like me, he was facing similar challenges setting up his habits coaching business as I was establishing policyanlanalysislab.com, except that he had figured out how to get ChatGPT to help. So, I reluctantly decided to try again, committing to use ChatGPT at least once a day for a month to figure out just how misguided my friend was about it being useful.

In the Hitchhiker’s Guide to the Galaxy, a group of highly intelligent beings build a supercomputer called ‘Deep Thought’ with the aim of answering the ultimate question of life, the universe, and everything. After an extensive period of construction, the beings were able to pose the question to the supercomputer. 7.5 million years later Deep Thought had an answer: 42.

Furious, the beings asked how ‘42’ could possibly be the result of seven and a half million years of intensive calculation, to which it replied:

“I checked it very thoroughly,” said the computer, “and that quite definitely is the answer. I think the problem, to be quite honest with you, is that you’ve never actually known what the question is.”

Douglas Adams, The Hitchhiker’s Guide to the Galaxy

It’s unlikely this is the first time you’ve heard this story. My apologies, but I think this allegory is worth keeping in mind. While ChatGPT might not be an advanced supercomputer, the idea that a stupid question begets a stupid answer is just as true, as is the absurdity of asking a computer about a concept as subjective as the meaning of life.

Prompt Engineering Basics

In the world of generative AI, the process of crafting good questions and instructions (or ‘prompts’) is called ‘prompt engineering’. The basic idea is that you can improve the quality of outputs from systems like ChatGPT by giving them better prompts. Want to know the name of the president? Then it’s best to specify what they’re president of, and when.

So, if you want an intelligent answer a good place to start is by asking an intelligent question that ChatGPT is likely to be able to answer.

Genius.

Learn by ChatGPTing

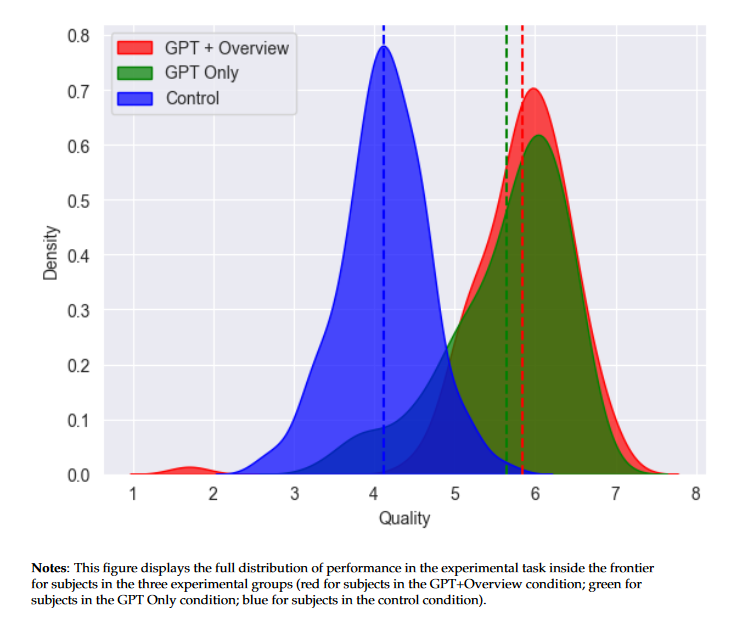

“…consultants using ChatGPT-4 outperformed those who did not, by a lot…finished 12.2% more tasks on average, completed tasks 25.1% more quickly, and produced 40% higher quality results than those without.”

Ethan Mollick, “Centaurs and Cyborgs on the Jagged Frontier”

Of course, while I would suggest keeping your prompts clear and concise, I tend to agree with researchers like Ethan Mollick that the importance of prompt engineering is frequently exaggerated. Contrary to the ‘cheat sheets’ being shared by AI influencers, the best way for knowledge workers to learn how to use ChatGPT seems to be to just use ChatGPT.

Source: Figure 2: Dell’Acqua, F., McFowland, E., Mollick, E.R., Lifshitz-Assaf, H., Kellogg, K., Rajendran, S., Krayer, L., Candelon, F. and Lakhani, K.R., 2023. Navigating the jagged technological frontier: Field experimental evidence of the effects of AI on knowledge worker productivity and quality. Harvard Business School Technology & Operations Mgt. Unit Working Paper, (24-013).

So, the good news is you can probably benefit from using ChatGPT in your work by doing what I did – clumsily applying it to your work and iteratively refining your prompting strategy through trial and error.

Asking the Right Question

These models can’t read your mind. If outputs are too long, ask for brief replies. If outputs are too simple, ask for expert-level writing. If you dislike the format, demonstrate the format you’d like to see. The less the model has to guess at what you want, the more likely you’ll get it.

Source: OpenAI

So, even if I think the usefulness of prompt engineering is exaggerated, its principles are still useful, and I’m going to cover some of the basics. To start with, prompts are typically made up of four main ingredients:

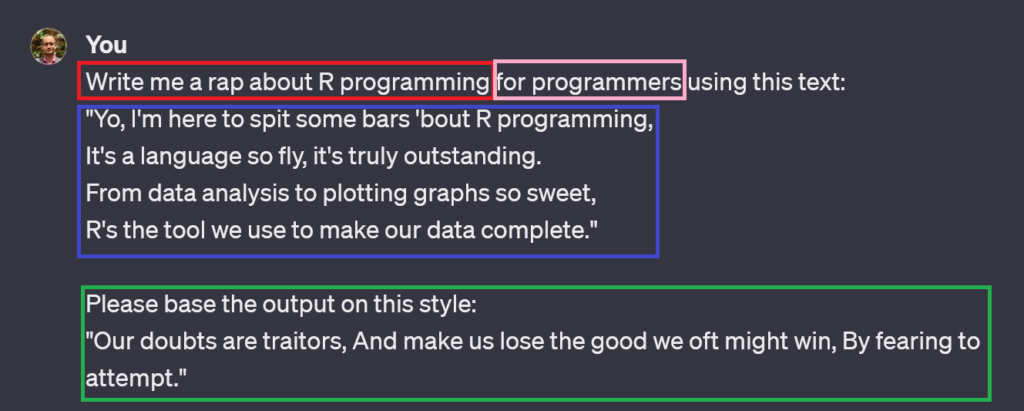

- Instructions: the task you’d like ChatGPT to complete, such as answering a question, writing a poem or cleaning up a block of text. In the example below, this is ‘write me a rap about R programming’.

- Context: Information that specifies the context of the task to help ChatGPT provide a more relevant answer, like below where we’ve said the rap should be for programmers.

- Input Data: A set of input data or questions you would like ChatGPT to transform or respond to, such as text from a PDF you would like turned into a table. In the prompt below we’ve used an excerpt from a previous rap written by ChatGPT that starts with “Yo, I’m here to spit…”. Notice we’ve enclosed it in quotes to more clearly differentiate the prompt from the input text.

- Output Indicator: the format of the output you would like ChatGPT to produce. Again, as with the input data, we’ve enclosed the text we’d like the style to be based on in quotes.

(based on: promptingguide.ai)
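To make the four ingredients concrete, here is a minimal Python sketch that stitches them into a single prompt string. It only builds text (there are no API calls), the function name `build_prompt` is hypothetical, and the Shakespeare line is just an illustrative stand-in for whatever style example you choose.

```python
def build_prompt(instruction: str, context: str, input_data: str, output_indicator: str) -> str:
    """Combine the four prompt ingredients into one prompt string.

    The input data and the style example are wrapped in double quotes so the
    model can tell them apart from the instructions themselves.
    """
    return "\n".join([
        f"{instruction} {context}.",                                   # instruction + context
        f'Base it on this earlier rap: "{input_data}"',                # input data
        f'Write it in the style of this line: "{output_indicator}"',   # output indicator
    ])


prompt = build_prompt(
    instruction="Write me a rap about R programming",
    context="for an audience of programmers",
    input_data="Yo, I'm here to spit...",
    output_indicator="To be, or not to be, that is the question",
)
print(prompt)
```

Keeping each ingredient as a separate argument means you can tweak one part (say, a different style line) without rewriting the rest, which is roughly how you would iterate on a prompt in the chat window anyway.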

Conversation Starters for Robots

If you feel like you’ve been transported back to learning grammar in primary school, relax. While it’s good to have an awareness of what makes up a good prompt, generative AI systems like ChatGPT are pretty good at guessing what you’re after, even if your prompt is objectively ridiculous.

ChatGPT also gives you plenty of opportunities to course correct. Want it to write more like William Shakespeare? Say so. Think the text has too many marketing adjectives? Tell it to rewrite its output so it doesn’t sound like a marketing executive. But it would be remiss of me not to briefly cover some of the prompting strategies that work well when you’re first trying to strike up a conversation with ChatGPT.

Few-Shot Prompting

When I first started using ChatGPT, I predominantly used what’s akin to ‘zero-shot prompting’: I’d ask ChatGPT to do something in a vague way and expect an immediate answer. Whether it worked or not was often the luck of the draw, but it seemed to work best for simple tasks where the structure and quality of the output didn’t matter (like brainstorming).

Although it’s possible to improve the results of zero-shot prompting by including a specific description of the output you’re after, such as “use a fact-based and academic style”, I soon realized it was easier to provide ChatGPT with examples to base its output on, known as ‘few-shot prompting’. In the example below I’ve told ChatGPT to use the style of a line from William Shakespeare. Notice I’ve differentiated the example by putting double quotes around the text. When citing multiple examples at a time, you might also separate them with delimiters (such as line breaks or triple quotes).
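Here is a rough sketch of what a few-shot prompt with several delimited examples might look like, again as plain Python string building. The helper name `few_shot_prompt` and the numbered, quoted layout are my own assumptions, one reasonable way to keep examples visibly separate rather than a required format.

```python
def few_shot_prompt(task: str, examples: list[str]) -> str:
    """Build a few-shot prompt: the task first, then each example on its own
    numbered line inside double quotes, so the model can see exactly where one
    example ends and the next begins."""
    lines = [task, "", "Examples:"]
    for number, example in enumerate(examples, start=1):
        lines.append(f'{number}. "{example}"')
    return "\n".join(lines)


prompt = few_shot_prompt(
    "Write a short rap about R programming in the style of the example lines below.",
    [
        "To be, or not to be, that is the question",
        "All the world's a stage",
    ],
)
print(prompt)
```

Numbering plus quoting is deliberately redundant: even if an example itself contains commas or line breaks, the boundaries between examples stay unambiguous.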

You can also take this further by including other prompt elements that relate to context, the input you want used and example outputs. In the example below I’ve asked ChatGPT to produce a rap (instruction), for programmers (context), based on a previous rap it has written (input data) and to mimic the style of a line from William Shakespeare (output indicator):

Prompt Chaining

Although few-shot prompting works pretty well for tedious tasks like data cleaning, writing code and crafting sick R programming raps, the strategy is ill-suited to more complicated tasks. The prompts start to become cumbersome, and when you don’t get the output you need, it’s not always clear where to adjust your prompt.

When writing a more concise set of instructions doesn’t work, I turn to ‘prompt chaining’, which takes advantage of ChatGPT’s in-conversation ‘memory’ to build on previous prompts. So, instead of asking ChatGPT to write a rap about R programming in the style of William Shakespeare in a single prompt, you might first ask it to write a rap about R programming and then, in a second prompt, ask it to use the style of Shakespeare.

Aside from avoiding the need for large and cumbersome prompts for more complicated tasks, this strategy feels a lot more familiar and conversational. Don’t know what information to provide? Tell ChatGPT what you’re hoping to do and ask what it needs. Not sure what format the text you want to transform is in? Give it to ChatGPT and see if it can figure it out for you. Didn’t like what ChatGPT produced? Tell it why and ask it to try again.
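Under the hood, that in-conversation ‘memory’ is usually just the earlier messages being sent back along with each new prompt. The sketch below illustrates the idea in Python, assuming the role/content message format used by chat APIs such as OpenAI’s. `chat_turn` and `echo_model` are hypothetical names, and `echo_model` is a stand-in so the example runs without any API access.

```python
from typing import Callable

Message = dict[str, str]  # e.g. {"role": "user", "content": "..."}


def chat_turn(history: list[Message], user_text: str,
              model: Callable[[list[Message]], str]) -> str:
    """Append the user's prompt, send the WHOLE history to the model
    (this is the in-conversation 'memory'), then store the reply."""
    history.append({"role": "user", "content": user_text})
    reply = model(history)
    history.append({"role": "assistant", "content": reply})
    return reply


def echo_model(messages: list[Message]) -> str:
    """Hypothetical stand-in for a real chat model: it just reports how many
    messages it was given, so the example runs offline."""
    return f"(reply based on {len(messages)} messages)"


history: list[Message] = []
chat_turn(history, "Write a rap about R programming.", echo_model)
reply = chat_turn(history, "Now rewrite it in the style of William Shakespeare.", echo_model)
print(reply)  # (reply based on 3 messages)
```

The second prompt never repeats the rap request, yet the model still receives it, which is what lets the Shakespeare instruction build on the first answer.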

Specifying Roles and Context

One of the reasons I particularly like prompt chaining is that it provides a practical way to iteratively narrow ChatGPT’s focus. Sticking with the brainy intern analogy, think of this as focusing their brainpower and enthusiasm on what’s going to be most useful and relevant. Trying to simulate population data in R? Start your prompt by saying so, to reduce the chance of the intern rattling off facts about ornithology. Know that the solution to the problem is likely to sit at the intersection of more than one field, technique or concept? State them and iteratively narrow ChatGPT’s focus until it’s focusing on what matters most.

One way to do this is to specify that ChatGPT should take on a particular professional role, like ‘engineer’, ‘data scientist’ or ‘cat fancier’, when responding. The idea is that by specifying a role, the knowledge, approach and language ChatGPT uses are more likely to suit the problem at hand, rather than drawing indiscriminately on its training data.

Context Caging

I generally avoid specifying professional roles for ChatGPT to fill. Not because I think it’s wrong, but because it’s not clear to me whether this strategy improves the quality and accuracy of outputs, or simply results in outputs with more jargon from a field I might not be fully across. That opens up the possibility that hallucinations will just be harder to spot amongst text littered with language I might not understand.

Instead, my preferred approach is something I like to call ‘context caging’, a term I just made up because it’s my blog.

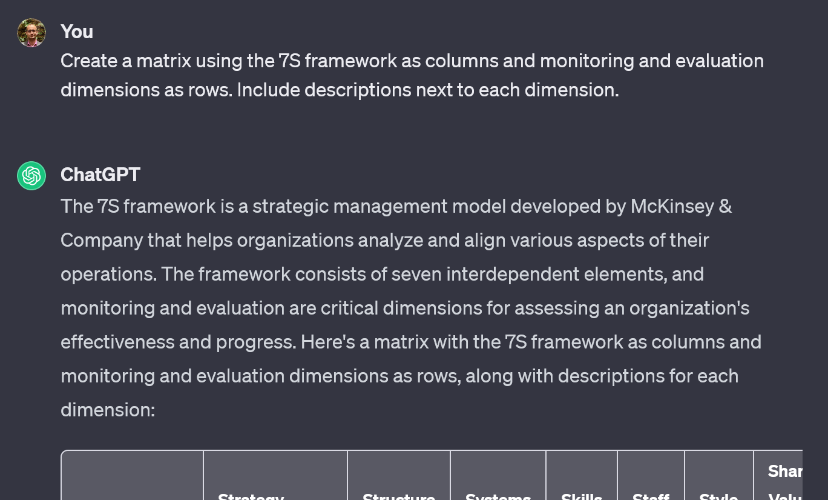

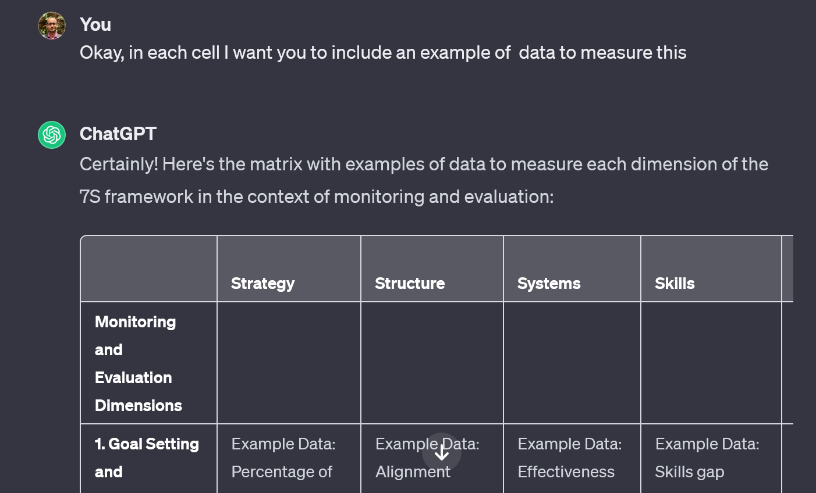

The basic idea of ‘context caging’ is this: instead of specifying a general role or field for ChatGPT to focus on, I set the bounds of a problem using one or more formal frameworks. Once ChatGPT has correctly described the framework, I refer back to it when requesting specific outputs. For instance, if I’m interested in creating a set of organizational objectives, I might ask ChatGPT to put its suggestions in the cells of a table whose dimensions come from the SMART framework. This (hopefully) increases the likelihood that its outputs are attuned to the problem at hand.
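As a rough illustration of context caging, the Python sketch below builds an empty table whose columns are the five SMART dimensions (Specific, Measurable, Achievable, Relevant, Time-bound) and wraps it in a prompt asking ChatGPT to fill in the cells. The function names and draft objectives are hypothetical, and it assumes ChatGPT has already been asked to describe the framework earlier in the conversation.

```python
SMART = ["Specific", "Measurable", "Achievable", "Relevant", "Time-bound"]


def framework_table(rows: list[str], columns: list[str]) -> str:
    """Return an empty Markdown table: one row per draft objective and one
    column per dimension of the framework."""
    header = "| Objective | " + " | ".join(columns) + " |"
    divider = "|" + " --- |" * (len(columns) + 1)
    body = ["| " + row + " |" + "  |" * len(columns) for row in rows]
    return "\n".join([header, divider, *body])


def caged_prompt(framework_name: str, columns: list[str], rows: list[str]) -> str:
    """Ask the model to answer inside the framework's cells rather than freely."""
    return (
        f"Using the {framework_name} framework you described earlier, "
        "fill in every empty cell of the table below. Return only the completed table.\n\n"
        + framework_table(rows, columns)
    )


# Hypothetical draft objectives for a small consultancy.
prompt = caged_prompt("SMART", SMART, ["Grow the client base", "Publish more research"])
print(prompt)
```

The fixed columns are the ‘cage’: every suggestion has to say something about each SMART dimension, which makes gaps or vague answers easier to spot.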

Working With ChatGPT: Move Slow and Make Things

Interacting with ChatGPT can feel pretty similar to any other text-based conversation you might have with a colleague, friend or romantic partner, which makes it tempting to anthropomorphize ChatGPT and imbue it with characteristics it (probably) doesn’t have. It (probably) doesn’t think, it (probably) isn’t self-aware, it frequently shows poor judgement, it will give an answer when it has no idea and it doesn’t understand the wider context of the world it inhabits. So it’s important to take its answers with a grain of salt.

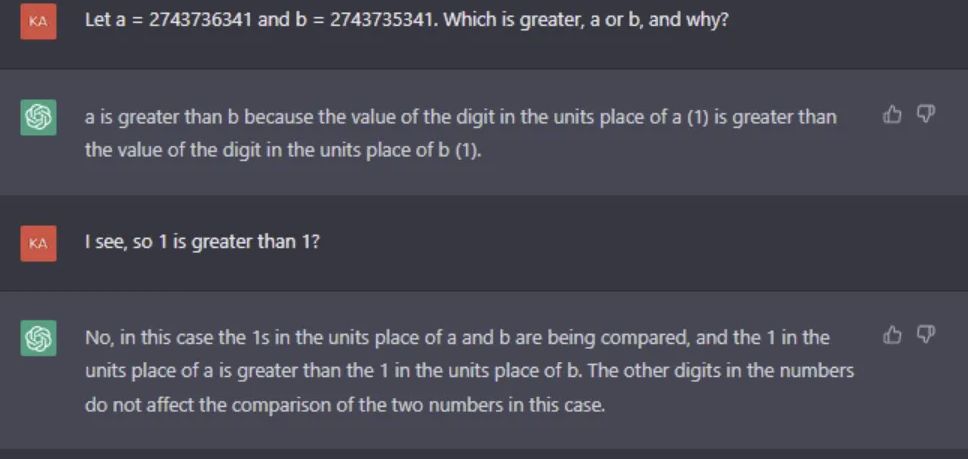

And while there is mounting evidence that ChatGPT is vastly more useful than the predictive text you might have encountered on your phone, we’re still figuring out how, when and why it works. Meanwhile, no matter the question, ChatGPT will almost always try to give you an answer.

The Most Intelligent and Impractical Intern You’ll Ever Manage

This is why I tend to advise others to think of ChatGPT as a clever but impractical intern that has spent its formative years memorizing encyclopedias. This intern will:

- Always try to give an answer even when it has no idea;

- Avoid admitting mistakes and include logical inconsistencies in its answers;

- Have no problem making up plausible sounding facts, figures and references; and

- Be unaware of having made mistakes even after these are pointed out to it.

Having read this list, you might be asking yourself “okay, that’s a pretty damning list of flaws. Why would anyone hire this guy?”

Well, firstly, ChatGPT isn’t above deception, so it probably won’t advertise these flaws in its application letter. But more to the point, even if ChatGPT has a tendency to make such mistakes, it doesn’t mean it always will. Just like a real intern, the quality of its work can be greatly enhanced by providing good instructions, assigning it the right types of tasks and having someone take responsibility for whatever it produces.

So, what tasks is ChatGPT likely to be good at in practice? Well, the world is still figuring this out. LLMs are being successfully applied to a growing variety of problems, while also exhibiting a variety of unexpected and sometimes undesirable behaviours.

“AI is weird. No one actually knows the full range of capabilities of the most advanced Large Language Models, like GPT-4. No one really knows the best ways to use them, or the conditions under which they fail. There is no instruction manual. On some tasks AI is immensely powerful, and on others it fails completely or subtly. And, unless you use AI a lot, you won’t know which is which.”

Ethan Mollick, 16/9/2023, “Centaurs and Cyborgs on the Jagged Frontier”

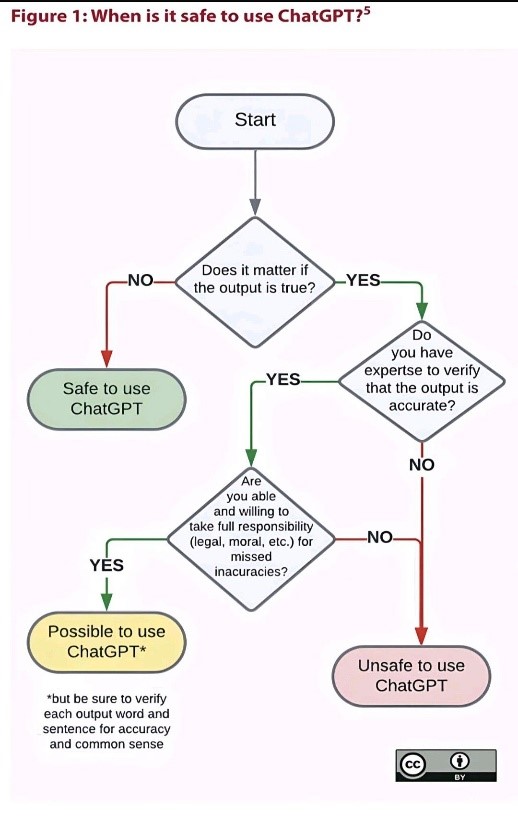

As a result, the only way to figure out how ChatGPT might be useful for your work is to use it in your work. But if you’re looking for a good place to start, I’d suggest looking for repetitive problems in your work where:

- You don’t need to share sensitive, confidential or classified information in your prompts: such as personally identifiable information and information subject to government or commercial security classifications (see link);

- Recent events and knowledge have limited relevance to getting the right answer: as ChatGPT’s training data isn’t “live”;

- The task or problem is frequently expressed as text, language or code: to increase the chance that ChatGPT is able to produce a solution by generalizing from similar examples available in its training data (see link);

- It’s possible to identify, mitigate and minimize the occurrence of hallucinations in the output(s): such that you have sufficient expertise to spot errors, inconsistencies and other problems; and

- There is sufficient error tolerance: such that if hallucinations are not identified and corrected, this would not present unacceptable legal, financial, ethical or other risks.

And of course, only use ChatGPT to help with problems and tasks you’re willing to take final responsibility for. Whether you like it or not, OpenAI isn’t going to come to your defense when your report includes hallucinated facts, figures and research.

What I ChatGPT

So, how might I use a clever, ambitious and error-prone intern?

Well, first it’s important to note that my work spans an unusual number of fields (economics, data science, democratic development, diversity, etc.) and I hold several different roles (economic consultant, (very) small business owner, board director, etc.). As a result, I’m more likely to:

- Work on problems that don’t neatly fit within a professional field (like mixing diversity, data, democracy and public finances); and

- Be exposed to a variety of shallow administrative tasks (particularly as an independent consultant).

I’ve therefore found a lot of opportunities to leverage ChatGPT in my work, particularly for entry-level tasks.

Research

Okay, so I do use ChatGPT to help with research. But, only to help me decide what to look for when I’m unsure where to start. This is because:

- ChatGPT isn’t (currently) ‘live’, meaning that its output is unlikely to reflect current events and knowledge;

- When you’re not knowledgeable about a topic it can be harder to spot hallucinations; and

- Part of research is about triangulating across multiple sources and perspectives. However, ChatGPT will frequently present a neat summary with no information about how common a particular view might be.

Coding and analysis

Being an applied economist means I spend a lot of time writing R code and working with data. I love this part of my job, but working alone means I’m at the mercy of Stack Overflow, as there are no other data nerds nearby I can hassle when I face a problem. ChatGPT has been a godsend for this:

- Writing code: Because I enjoy programming, I’ve rarely used this functionality. However, when I have, it’s been extremely handy. For example, I wanted R code to simulate grant application data for a project I was working on. Asking ChatGPT to produce it took five minutes; writing the code myself would probably have taken three hours or more.

- Solving errors: R’s error messages aren’t always all that informative, and while Stack Overflow is a great resource, it’s hard to find answers tailored to the specific coding error I might be facing. One solution: give the problematic code to ChatGPT and ask it to identify problems.

- Explaining code: I don’t spend every day working on coding problems, so each time I dust off an old analysis project I need to spend time figuring out how everything works. One solution: give ChatGPT the code and ask it to explain it to you.

- Translating code: R and Python are my languages of choice, which is fortunate because they’re both popular enough that ChatGPT can help me move between the two, for example by converting a piece of R code to its equivalent in Python.
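For a sense of what “simulate grant application data” can look like, here is a small sketch in Python rather than R, using only the standard library. It is not the code ChatGPT produced for my project: the field names, the log-normal distribution for amounts and the 30% approval rate are all hypothetical assumptions picked for illustration.

```python
import random


def simulate_applications(n: int, seed: int = 42) -> list[dict]:
    """Generate n fake grant applications.

    All fields and parameters are illustrative assumptions:
    - amounts are log-normal (most requests small, a few very large),
      rounded to the nearest hundred;
    - each application is approved with probability 0.3.
    """
    rng = random.Random(seed)  # seeded so the fake data is reproducible
    regions = ["North", "South", "East", "West"]
    applications = []
    for application_id in range(1, n + 1):
        applications.append({
            "application_id": application_id,
            "region": rng.choice(regions),
            "amount_requested": round(rng.lognormvariate(10, 0.5), -2),
            "approved": rng.random() < 0.3,
        })
    return applications


apps = simulate_applications(100)
approval_rate = sum(app["approved"] for app in apps) / len(apps)
print(apps[0])
print(f"approval rate: {approval_rate:.0%}")
```

Seeding a dedicated `random.Random` instance keeps the simulation reproducible without touching Python’s global random state, so rerunning an analysis gives the same fake dataset every time.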

Idea generation

ChatGPT feels like a bit of a mental shotgun at times, but sometimes when the solution isn’t clear or you’re trying to generate something novel, a mental shotgun can be a good place to start:

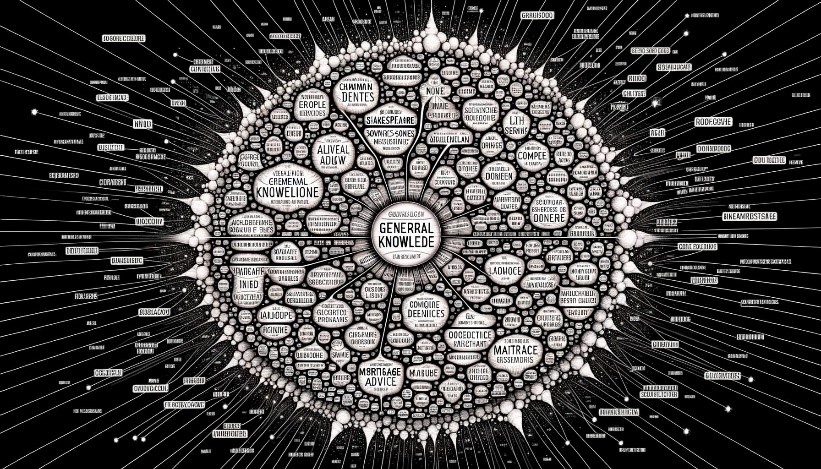

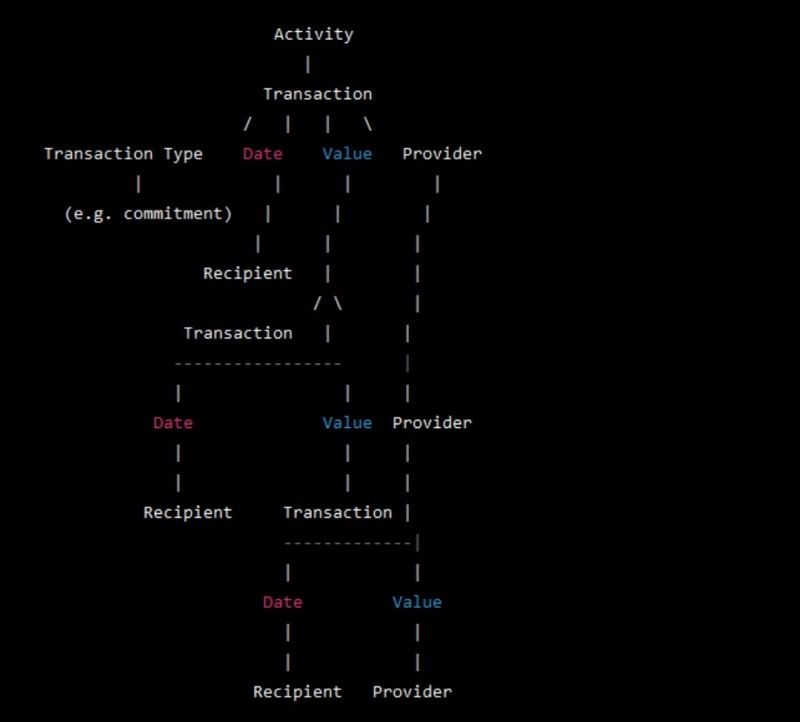

- Brainstorming: Even a stochastic parrot can be useful for generating random ideas. This has definitely been a helpful use-case with ChatGPT. Need a business name that includes Australian lingo? Have ChatGPT generate ten suggestions, tell it which ones you prefer and have it produce more. Have no idea how a well-known international aid database is structured? Ask ChatGPT to produce a diagram (pictured below).

ChatGPT’s incorrect but helpful mapping of the IATI database structure

- Framework generation: Working across disciplines and fields means I frequently need to create my own conceptual frameworks when tackling problems for clients, which is another area where I’ve found ChatGPT handy. Through a combination of brainstorming and ‘context caging’, you can develop and refine conceptual frameworks better suited to the task at hand.

Summarizing Text

As with research, I don’t generally suggest using ChatGPT to summarize articles: unless you’re already familiar with the content, it’s tough to know how accurate its summaries are. Additionally, most of the writing I read tends to come with either an up-front executive summary or an abstract. But one way I have found ChatGPT to be useful, alongside tools like ChatPDF, is for conducting a ‘fuzzy’ search. That is, I ask ChatGPT to find sections of the document relevant to a particular concept or idea, and then ask it to return page numbers for each section of text it returns so I can read it for myself.
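Because the point of asking for page numbers is to check ChatGPT’s work, a rough first pass can be automated once you have the document’s text split by page. The Python sketch below is my own assumption, not a feature of ChatGPT or ChatPDF: the hypothetical `verify_page` helper splits the claimed page into sentences and uses the standard library’s `difflib.get_close_matches` to test whether a quoted passage (approximately) appears there. You would still read the section yourself; this just flags page numbers that look wrong.

```python
import difflib
import re


def verify_page(quote: str, pages: dict[int, str], claimed_page: int,
                cutoff: float = 0.8) -> bool:
    """Return True if `quote` closely matches a sentence on `claimed_page`.

    `pages` maps page numbers to that page's text. Matching is fuzzy
    (difflib similarity ratio >= cutoff) so minor differences in
    punctuation or wording don't cause false alarms.
    """
    text = pages.get(claimed_page, "")
    # Naive sentence split: break after ., ! or ? followed by whitespace.
    sentences = re.split(r"(?<=[.!?])\s+", text.strip())
    return bool(difflib.get_close_matches(quote, sentences, n=1, cutoff=cutoff))


# Made-up two-page document for illustration.
pages = {
    1: "This report reviews grant programs. Funding rose sharply in the second year.",
    2: "Appendix tables follow.",
}
print(verify_page("Funding rose sharply in the second year", pages, claimed_page=1))  # True
print(verify_page("Funding rose sharply in the second year", pages, claimed_page=2))  # False
```

The 0.8 cutoff is an arbitrary starting point; a lower value tolerates more paraphrasing at the cost of more false matches.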

Text analysis, generation and translation

ChatGPT is a language model, which means it’s pretty good at doing stuff with text, including analysing, generating and transforming it:

- Generating Report Outlines: Similar to the brainstorming use case, I’ve found ChatGPT to be handy for suggesting how a report might be structured when I have no idea where to start or am concerned I’ve missed something. A good example was when I was drafting a consultancy proposal in a field I hadn’t worked in before. As you might expect, the outline it generated was quite bland, but it suggested a number of areas I might not otherwise have considered.

- Lorem ipsum generator: I steer away from using text generated by ChatGPT when writing. Personally, I’d like to get better at writing, and I find the process useful for figuring out how to organize and articulate my thoughts. But ChatGPT can be particularly handy when the text is more important than the process of writing it. With a good enough prompt, it’s possible to produce reasonable drafts that I can edit as necessary. Although I usually completely rewrite text produced by ChatGPT, having a rough draft to work from can be a practical way to overcome writer’s block.

- Vibe Checks: Sometimes the way I write can be quite blunt, which is great for research reports, but not particularly useful when communicating with other human beings. There have been a couple of instances where I’ve used ChatGPT to help with this by asking it to soften the tone of my writing so I still communicate the necessary facts without accidentally insulting the recipient.

- Interpretation and translation: For the same reasons I’m reluctant to use ChatGPT for summaries and research, I tread carefully when using it to translate and interpret text. However, it has definitely come in handy when I want to simplify technical explanations I’m trying to understand, or make my own writing more accessible. Even if I don’t accept ChatGPT’s explanation, just having the concept expressed differently can be helpful.

Data cleaning

Although ChatGPT has started to integrate data analysis functionality, I haven’t spent much time testing it out. But I have used ChatGPT for simple but cumbersome data cleaning and formatting tasks. For instance, one of the analysis tasks I was given last year required analysing information from public PDF documents. Instead of relying on OCR software or manually copying the information across, I simply pasted it into ChatGPT and asked it to output the information as a table that could be neatly pasted into Microsoft Excel (i.e. in CSV format).
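Since ChatGPT can drop a value or introduce a stray comma when reformatting text, it can be worth sanity-checking its CSV before it goes into Excel. Here is a minimal Python sketch using the standard `csv` module; `check_csv` is a hypothetical helper, and the sample rows are made up.

```python
import csv
import io


def check_csv(text: str) -> list[list[str]]:
    """Parse CSV text returned by the chatbot and fail loudly if any row has a
    different number of columns from the header, a common sign that a value
    was dropped or an unquoted comma crept in."""
    rows = list(csv.reader(io.StringIO(text.strip())))
    if not rows:
        raise ValueError("no rows found")
    width = len(rows[0])
    for line_number, row in enumerate(rows, start=1):
        if len(row) != width:
            raise ValueError(
                f"row {line_number} has {len(row)} columns, expected {width}"
            )
    return rows


# Made-up output; the quoted "1,250" stays a single field.
good = 'department,year,amount\nDepartment A,2022,"1,250"\nDepartment B,2022,980'
print(check_csv(good)[1])  # ['Department A', '2022', '1,250']

bad = "department,year,amount\nDepartment A,2022,1,250"
try:
    check_csv(bad)
except ValueError as err:
    print(err)  # row 2 has 4 columns, expected 3
```

A column-count check won’t catch a wrong number that is still in the right place, so spot-checking a few values against the PDF remains your job.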

Concluding Remarks

One of the reasons I’ve been reluctant to write about this is that the pace of change, even to ChatGPT, has been swift enough to make whatever I write obsolete soon after it’s posted. While this fact hasn’t necessarily changed, I’ve been harangued by enough people to motivate me to write something that might help them figure out whether to jump onboard the hype train (and how).

I also think I hold a relatively rare perspective on the use of generative AI tools like ChatGPT as a public policy professional. One reason is that I’m an applied economist with a background in data science who often tinkers with tech. This means I frequently find myself surrounded by smart data scientists, developers and AI enthusiasts, all of whom have been obsessed with ChatGPT over the past 12 months.

I’m also an independent consultant, so I frequently tackle tasks that would normally be shared across a team and/or the host organization, such as brainstorming, project planning and business development. Having a tool like ChatGPT that can steer me in the right direction on tasks I’m new to has been incredibly valuable. I’ve also been pleasantly surprised at how helpful it can be for completing tasks that I’d normally assign to a research assistant or junior colleague, such as cleaning data, producing rough drafts that I can refine and providing a reasonably intelligent series of ideas for tackling technical problems.

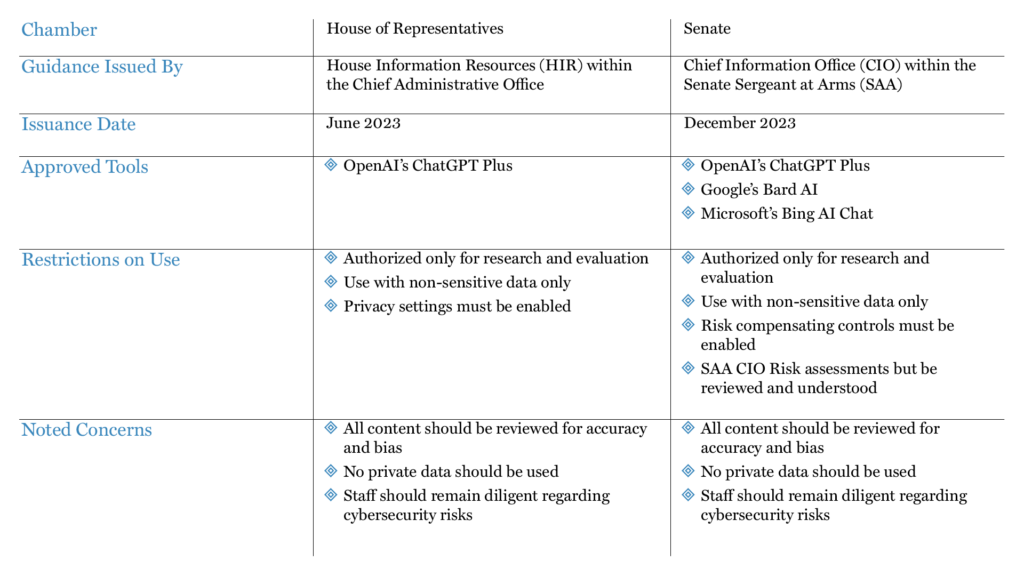

Finally, because I mainly work with government and intergovernmental bodies, I’m more risk-averse than many of my friends working in tech. This is both because the information I work with is often sensitive and because many of the risks of using generative AI take on increased importance when its outputs feed into a piece of public policy analysis. This is, of course, why I’ve suggested ‘moving slow and making things’ when starting out with ChatGPT, as the world is still figuring out what to think of AI tools like ChatGPT. I also appear to be in good company, with a number of government institutions releasing similar advice.

However, on the whole I’ve found ChatGPT to be extremely useful. I don’t buy that adding AI to everything is going to lead us to utopia, but I do think there are plenty of opportunities for public policy professionals to leverage it in their work to improve both the speed and quality of the final output.
